Legacy code. Lessons from a period without automated tests.

One of my first professional software development experiences was on a legacy codebase supporting core banking processes. It was a mix of Cobol and Java. There was a continuous effort to migrate to the latter, by rewriting Cobol routines and screens or wrapping them in Java. Even so, numerous new developments were still being written in Cobol.

This is how I learnt this ancient magic, usually associated with dino-devs, where a single misplaced period could break your entire logic flow. And to be honest, I liked it. It was different, it was interesting, and above all, it was code running important real-world processes. And I had to modify it and extend it.

Creating new routines in Cobol was one thing. With a clear initial requirement, new code is rarely unreadable, at least to the person writing it. Diving into a 30-year-old routine was another thing entirely. It handled many things you were not aware of, none of them documented and, of course, none of them tested.

At the time, I didn’t know better. In hindsight, though, there were huge technical and organisational issues. They translated into enormous quality problems that could be traced back to one fact: no automated tests.

Why do automated tests matter?

Automated tests are your safety net. They are executable code that verifies the behaviour of your software, and they should be embedded in your development process. They can run automatically and periodically, warning you as soon as a new error appears. They protect you, which is also why they let you build faster in the long run: they avoid rework.

In the best scenario, you write them while building your software. In the worst case, there are none. Every time you change something, you do it at your own risk, ready to crash your application or introduce new bugs. Automated tests let you verify that your changes didn’t break or change anything unexpectedly. They also act as documentation of your software’s behaviour. They are a way of communicating between team members, too: a test states what the code is meant to do, so others can check that they correctly understand its author’s intentions.

This way, you can refactor safely and create new functionality without fear of introducing bugs in the old. Tests are not a burden but a real enabler of your productivity.

The causes

Let’s look at a few common reasons why a project may end up without automated tests.

Organisational divide

A common approach in software development is the waterfall model, where the stages follow one after another: Requirements → Analysis → Design → Development → Testing → Deployment → Maintenance. Usually, a different team performs each step. This methodology was widely used in the past and has largely been replaced by Agile methodologies. However, the historic organisation remains visible when that transformation hasn’t been fully implemented, or wasn’t done correctly. This translates into two separate teams. One builds the software and is known as the development (DEV) team. The other tests it, ensures its quality, and is known as the quality assurance (QA) team.

In the typical waterfall flow, the development team first writes the code and, when it deems it complete, releases it to the next team, the QA team. The QA team is then busy discovering the software, running regression tests, creating new test scenarios, and doing a lot of manual work. Meanwhile, the development team has moved on to the next feature. By the time the QA team finds the issues, the developers’ attention has shifted completely to a different task. Because of this context switch, the fixes are harder to implement, and the release of new features is delayed as well. The result is rework, frustration, unforeseen time consumption, and delays everywhere.

This kind of setup also creates another problem. There’s an incentive, or at least a tendency, to pit the DEV team against the QA team. The former just want to push code that gets through QA, even by concealing possible issues. The QA team, in turn, wants to chase the developers and pin on them the bugs they create, even when the impact is limited or controlled. This translates into a lack of collaboration, issue avoidance, and hiding. Of course, this not only creates a toxic work environment but also hurts overall quality. This doesn’t mean you shouldn’t have a QA team. Rather, you should embed part of the test creation in the building of your software. Don’t wait to start validating. Build your safety net as you move forward.

History. No test was ever written.

Another reason is that no automated test was ever written. This implies several things.

First, there’s no testing culture among developers and no examples to look at. Unless tests are explicitly requested, organisational inertia will resist creating them.

Second, the code most probably evolved in a way that makes it hard to test: hardcoded dependencies and properties, no modular design with a clear separation of responsibilities, and huge files that are hard to understand.

Third, in my experience, tests get outdated. When they are not maintained as the software evolves, they may only exist in text files or spreadsheets, as a series of manual steps to perform. You might think that’s better than nothing, but such tests are often unreliable. They may not cover new functionality or changes, or they may expect the software to behave in a way that is no longer intended. They add more confusion than anything else.
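To make "hardcoded dependencies" concrete, here is a minimal Java sketch. The class `DormancyChecker` and its 365-day rule are hypothetical, chosen only for illustration. If the method called `LocalDate.now()` directly, every result would depend on the day the code runs. Passing a `java.time.Clock` into the constructor instead creates a seam where a test can supply a fixed date.

```java
import java.time.Clock;
import java.time.LocalDate;
import java.time.temporal.ChronoUnit;

// Hypothetical rule: an account becomes dormant after 365 days without activity.
class DormancyChecker {
    private static final long DORMANCY_DAYS = 365;

    // The clock is injected instead of hardcoded, so tests can control "today".
    private final Clock clock;

    DormancyChecker(Clock clock) {
        this.clock = clock;
    }

    boolean isDormant(LocalDate lastActivity) {
        // A hardcoded LocalDate.now() here would make the result depend on the
        // day the code runs; LocalDate.now(clock) reads the injected clock instead.
        LocalDate today = LocalDate.now(clock);
        return ChronoUnit.DAYS.between(lastActivity, today) >= DORMANCY_DAYS;
    }
}
```

In production you would pass `Clock.systemDefaultZone()`. A test can pass `Clock.fixed(Instant.parse("2024-01-01T00:00:00Z"), ZoneOffset.UTC)` and get the same answer on any day it runs. Legacy code rarely has such seams, which is one reason it is hard to test after the fact.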

As a rule of thumb, if you’re running manual tests from a text document, you will benefit greatly from automating those steps and tracking how your tests evolve.

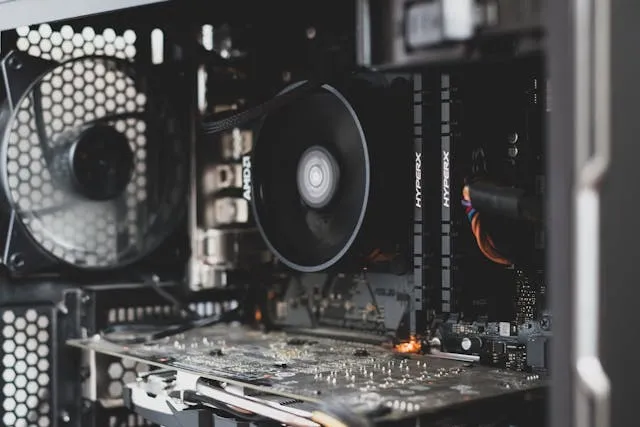
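A manual test document often already is a table of inputs and expected results. Here is a minimal Java sketch of turning such a table into an automated, table-driven test. The withdrawal rule, the class names, and the rows are all hypothetical. The sketch uses plain checks instead of a test framework so that it stays self-contained.

```java
import java.math.BigDecimal;
import java.util.ArrayList;
import java.util.List;

// Hypothetical rule under test: a withdrawal is allowed if it does not exceed
// the balance plus the account's overdraft limit.
final class WithdrawalRule {
    private WithdrawalRule() {}

    static boolean isAllowed(BigDecimal balance, BigDecimal overdraftLimit, BigDecimal amount) {
        return amount.compareTo(balance.add(overdraftLimit)) <= 0;
    }
}

// One row of what used to be a manual test spreadsheet.
final class WithdrawalCase {
    final String description;
    final BigDecimal balance;
    final BigDecimal overdraftLimit;
    final BigDecimal amount;
    final boolean expectedAllowed;

    WithdrawalCase(String description, String balance, String overdraftLimit,
                   String amount, boolean expectedAllowed) {
        this.description = description;
        this.balance = new BigDecimal(balance);
        this.overdraftLimit = new BigDecimal(overdraftLimit);
        this.amount = new BigDecimal(amount);
        this.expectedAllowed = expectedAllowed;
    }
}

final class WithdrawalRuleTableTest {
    private WithdrawalRuleTableTest() {}

    // Adding a scenario means adding a row, not writing new manual steps.
    static final List<WithdrawalCase> CASES = List.of(
            new WithdrawalCase("within balance", "100.00", "0.00", "50.00", true),
            new WithdrawalCase("exactly the balance", "100.00", "0.00", "100.00", true),
            new WithdrawalCase("uses the overdraft", "100.00", "50.00", "140.00", true),
            new WithdrawalCase("exceeds the overdraft", "100.00", "50.00", "150.01", false));

    // Runs every row and returns the descriptions of the rows that failed.
    static List<String> failures() {
        List<String> failed = new ArrayList<>();
        for (WithdrawalCase c : CASES) {
            boolean actual = WithdrawalRule.isAllowed(c.balance, c.overdraftLimit, c.amount);
            if (actual != c.expectedAllowed) {
                failed.add(c.description);
            }
        }
        return failed;
    }
}
```

Because the table lives in code, it can be versioned and reviewed along with the rule it checks. With JUnit 5, the same rows could feed a `@ParameterizedTest`, which reports each row as a separate result.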

History. Legacy code

As mentioned before, code without tests evolves in a way that makes it hard to test. This is especially true of code that has lived for years and was written by people long gone. It carries embedded knowledge that no one else can really explain. Over time, bad practices creep in, because without tests, cleaning up the code without breaking things is cumbersome and risky. The result is spaghetti code, poor modularity, outdated comments, convoluted logic, huge files, and the many other antipatterns we see in so many codebases.

This is the weight of history. Without any safety net, it was too risky to keep improving the code while keeping the software reliable.

No direction for implementing tests

Direction from leadership is not a sufficient condition, but it is a necessary one. In my experience, when no manager, architect, or senior developer promotes automated tests, they simply never get written. If writing automated tests isn’t accepted as part of building software, those evaluating the team’s capacity will see it as a waste of time. The team will then be asked to work on feature delivery instead of testing. This is, of course, a short-term view, but a widespread one.

Maintenance decay spiral

When the development team has to spend its time fixing issues and bugs, there’s less time to learn and to proactively protect its future capacity. That could mean implementing better test suites, improving its DevOps practices, or keeping its knowledge of its tools and technologies up to date. In other words, if you don’t actively take care of your code, team members, and processes, your capacity keeps shrinking. Your team ends up busy keeping the lights on while technical debt accumulates and your ability to innovate declines. Innovation is not the only casualty: it also becomes increasingly difficult to deliver new features, fix security issues, and ensure quality. This translates into growing maintenance and operational costs, while your delivery capacity keeps decreasing.

How to start

There are other reasons besides these that can explain why your software has no automated tests. Let’s now look at a few actions you can take to start tackling this issue.

First of all, you need the emotional and strategic strength to let your team spend time now in order to have more time in the future. What exactly to do depends on your situation. Do you have separate DEV and QA teams? Could they work together in the future? The goal is to start with small, coordinated actions pointing in the same direction, building your quality safety net.

Your quality team can start by automating simple things: REST/SOAP calls, stress-testing scenarios, the most common flows. Starting with simple cases lets them build up knowledge of the techniques and tools required. If there’s no internal knowledge, consider training, or bringing in someone to work with your team for a short time. And I repeat: someone to work with your team and empower them, not to do the job for them. You may be tempted by short-term gains, but we need to think long-term here.

For your development team, start with unit tests, i.e. tests that check small, self-contained pieces of code. They are the simplest kind, and the team shouldn’t find it difficult to get a good grip on them. They are probably capable of writing them already. If they aren’t doing so, it’s probably because of pressure to deliver quickly at the expense of testing. Once they have a good base, they can move on to integration tests, where different functionalities and modules interact, and to other, more advanced tests.
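As an illustration of what a unit test targets, here is a minimal Java sketch. It pairs a small, self-contained function, an IBAN check-digit validation using the ISO 13616 mod-97 rule, with a few focused checks. The class names are hypothetical. The function only checks the check digits and the 5 to 34 character bounds, not country-specific lengths. To stay dependency-free, the sketch uses plain checks rather than a framework such as JUnit.

```java
// Small, self-contained function: validates an IBAN's check digits (mod 97).
final class Iban {
    private Iban() {}

    static boolean isValid(String iban) {
        String s = iban.replace(" ", "").toUpperCase();
        if (s.length() < 5 || s.length() > 34) {
            return false;
        }
        // Move the country code and check digits to the end.
        String rearranged = s.substring(4) + s.substring(0, 4);
        int remainder = 0;
        for (char ch : rearranged.toCharArray()) {
            if (ch >= '0' && ch <= '9') {
                remainder = (remainder * 10 + (ch - '0')) % 97;
            } else if (ch >= 'A' && ch <= 'Z') {
                // Letters become two digits (A = 10 ... Z = 35).
                remainder = (remainder * 100 + (ch - 'A' + 10)) % 97;
            } else {
                return false;
            }
        }
        return remainder == 1;
    }
}

// Each test checks one behaviour and is named after it.
final class IbanTest {
    private IbanTest() {}

    private static void check(boolean condition, String name) {
        if (!condition) {
            throw new AssertionError("failed: " + name);
        }
    }

    static void acceptsValidIbanWithSpaces() {
        check(Iban.isValid("GB82 WEST 1234 5698 7654 32"), "valid IBAN with spaces");
    }

    static void rejectsSingleDigitTypo() {
        check(!Iban.isValid("GB82 WEST 1234 5698 7654 33"), "last digit changed");
    }

    static void rejectsTooShortInput() {
        check(!Iban.isValid("GB82"), "too short");
    }
}
```

With JUnit 5, each method would instead carry `@Test` and use `assertTrue`/`assertFalse` from `org.junit.jupiter.api.Assertions`. The shape stays the same: one small function and several short, focused tests named after the behaviour they check.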

And I know: if you’re working on a legacy codebase, it’s probably complex, convoluted, and hard to test. Your team is also already busy with so many other things. That’s normal. The goal is to stay focused and not to chase 100% test coverage. Instead, improve quality incrementally so that, over time, the gains accumulate.

So be strategic in your approach:

- Don’t write tests for the sake of writing tests. Each one should have a purpose and a clear focus.

- Don’t think it’s something you can do later, or that you can bring in someone external just to write tests. Aim to embed test creation in your software building process.

- When a new bug is reported, do your best to write an automated test that reproduces it. While fixing it, you may also have to modify or step through code used elsewhere; write small unit tests for those functions. In other words, add unit tests as you move through the code.

- Are there common flows that your code supports? Create tests for them.

- Are there areas of the code that often cause problems? Try to cover them with tests. They probably aren’t the easiest ones to tackle, but your future self will thank you.

- As a bonus, static code analysis tools (like SonarQube) can help you find unreported bugs and improve overall code quality. You can use their reports to fix those issues and to add unit tests for the affected code.
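Here is a minimal Java sketch of turning a bug report into a regression test. Everything in it is hypothetical: the report ("three installments for a 100.00 loan add up to 99.99"), the `InstallmentPlan` class, and its fix. The assumed original code rounded every installment down to the cent. In the fixed version, the last installment absorbs the leftover cents. The test would fail against the buggy version and passes after the fix, so the bug cannot silently come back.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;
import java.util.ArrayList;
import java.util.List;

// Hypothetical fixed version of a legacy routine.
final class InstallmentPlan {
    private InstallmentPlan() {}

    // Splits an amount into n installments rounded down to the cent; the last
    // installment absorbs the remaining cents so the total is preserved.
    static List<BigDecimal> split(BigDecimal amount, int n) {
        if (n <= 0) {
            throw new IllegalArgumentException("n must be positive");
        }
        BigDecimal base = amount.divide(BigDecimal.valueOf(n), 2, RoundingMode.DOWN);
        List<BigDecimal> installments = new ArrayList<>();
        for (int i = 0; i < n - 1; i++) {
            installments.add(base);
        }
        installments.add(amount.subtract(base.multiply(BigDecimal.valueOf(n - 1))));
        return installments;
    }
}

// Regression test named after the (hypothetical) bug report it reproduces.
final class InstallmentPlanRegressionTest {
    private InstallmentPlanRegressionTest() {}

    static void installmentsAddUpToTheLoanAmount() {
        BigDecimal amount = new BigDecimal("100.00");
        BigDecimal total = InstallmentPlan.split(amount, 3).stream()
                .reduce(BigDecimal.ZERO, BigDecimal::add);
        // compareTo ignores scale, so 100.00 and 100.0 count as equal.
        if (total.compareTo(amount) != 0) {
            throw new AssertionError("expected " + amount + " but was " + total);
        }
    }
}
```

Writing the test before the fix confirms that it actually reproduces the reported behaviour. Keeping it afterwards turns a one-off fix into a permanent part of the safety net.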

Conclusion

Yes, creating tests is work. Especially when you’re just starting, it may feel like boring, overwhelming, unnecessary work that only slows your team down. But sooner than you expect, you’ll start saving time and improving quality. That is time you’re not spending firefighting production issues or fixing regression bugs. You can use it instead to build new features, improve your processes, and create more value for your team and for your users or customers. Think in the long run: take the time now to have more of it later.